Fitness Camera – Turn Your Phone's Camera Into a Fitness Tracker

This weekend, I attended Pioneer’s first Hackathon.

Their call to action:

Help upload the real world to the Internet. Work on something to make the experience of working on the web more fun for the billions staying at home. Overlay heart-rate on Zoom calls. Improve video conferencing quality. Build a Tandem competitor, one for friends rather than coworkers. The stage is yours.

My team of 1 had 48 hours to produce a working demo.

Ideation

Earlier this year, I played with TensorFlow Lite’s PoseNet model. I thought it had a lot of potential and I figured that this hackathon would be a good opportunity to find a use for it.

As a quantified self enthusiast, I track pretty much everything I do. While I’ve been able to automate a lot of what I track, I’ve never been satisfied with the way I track my workouts:

- I tried manual tracking using the Strong app.

- I tried NFC tracking using NFC tags and the Nomie app.

- I tried voice tracking using Google Assistant on my Google Home speaker.

Could I use machine learning and a camera to automatically track my workouts? This hackathon would be my chance to find out.

You might wonder what workout tracking has to do with the hackathon’s theme of “mak[ing] the experience of working on the web more fun”. I think I might have stopped reading the prompt at “Help upload the real world to the Internet”, and decided that “the real world” would be my workout and that “the Internet” would be some cloud database. When I realized my mistake, I changed my pitch to “a way to encourage employees to be more active”.

Implementation

Improving performance

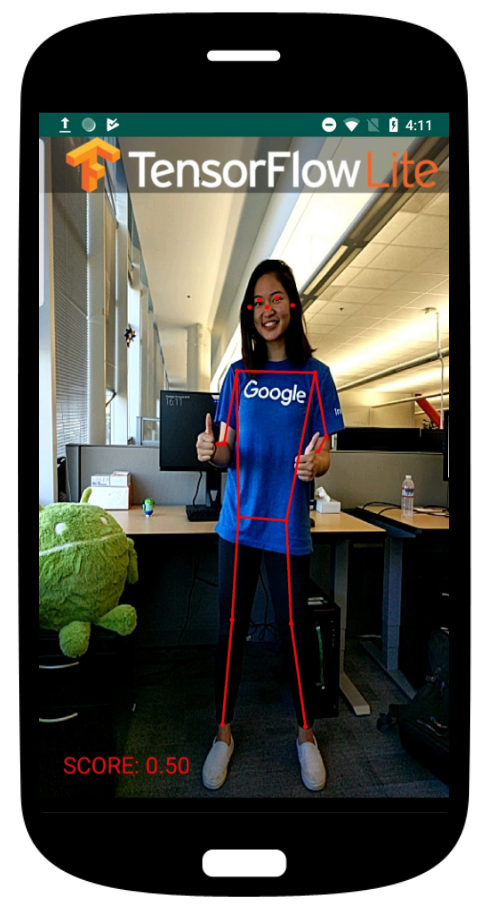

The first thing I did was to grab the TensorFlow Lite PoseNet Android Demo from GitHub. The app uses the phone’s camera to track the location of 17 distinct keypoints (nose, eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles), which it overlays on top of the video preview. After building it and deploying it to my phone, I noticed that the performance was terrible. On my Nokia 6.1 (not exactly flagship material), the app struggled to maintain 10 FPS. That wouldn’t be fun to use or demo, so I investigated a bit.

After a couple of hours of investigation, I had found and addressed 3 important performance bottlenecks. The app could now reach 25 FPS, at least 2.5 times the frame rate it started with. While far from perfect, the app finally felt usable.

Assessing accuracy

Over the next hour, I let my phone watch me perform all kinds of exercises, at different angles, distances, and lighting conditions. I paid close attention to the accuracy of the pose estimation overlay, to understand which kinds of exercises it would and wouldn’t be compatible with. For example, it’s extremely accurate at tracking all 17 keypoints when I face the camera and do squats. Yet it struggles to recognize anything other than my face (nose, eyes, ears) when I face the camera and do push-ups. Likewise, it’s very good at tracking hand movements…until you hold a dumbbell and your hands suddenly disappear.

Initially, I planned to use pose estimation and a trained classifier to detect distinct phases of an exercise. For example, a burpee could be decomposed into these 3 phases: crouching, planking, and jumping.

However, due to PoseNet’s inconsistent accuracy and the hackathon’s time constraints, I pivoted to something much simpler. Instead of classifying exercises, it would only count repetitions. To further simplify the scope, I would only support 4 bodyweight exercises: squats, push-ups, sit-ups, and pull-ups.

Counting repetitions

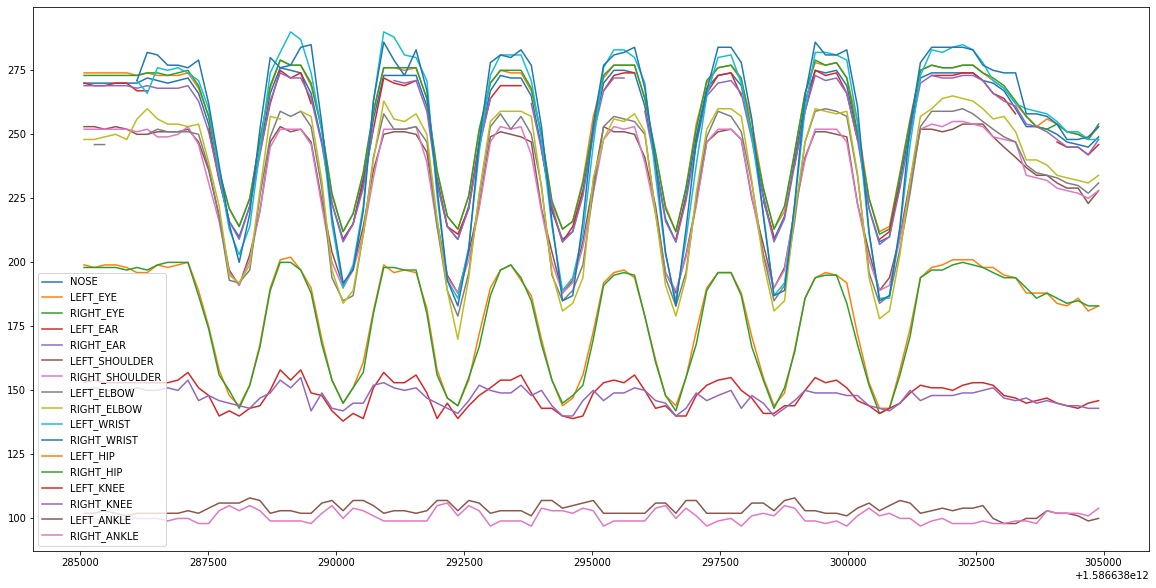

As usual, I performed exercises in front of the camera, but this time I logged all frames (snapshot of 17 keypoints) to a CSV file. I then analyzed the data using Python and Google Colab, to look for patterns and find an appropriate repetition counting algorithm. You can view the notebook here.
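As a rough illustration, a log like this could be loaded in Python along the following lines. The column names (`timestamp_ms`, `nose_y`, and so on) and the sample values are made up for this sketch, since the actual CSV layout isn't shown in this post; `load_series` is a hypothetical helper, not something taken from the notebook.

```python
import csv
import io

# Hypothetical CSV layout: one row per frame, with a timestamp and
# x/y columns for each keypoint. Only two keypoints are shown here.
SAMPLE_CSV = """timestamp_ms,nose_x,nose_y,left_hip_x,left_hip_y
0,120.0,80.0,110.0,200.0
40,121.0,95.0,110.5,215.0
80,119.5,130.0,111.0,240.0
120,120.5,96.0,110.0,216.0
160,120.0,81.0,110.0,201.0
"""


def load_series(csv_file, column):
    """Return the values of one column as a list of floats, in frame order."""
    reader = csv.DictReader(csv_file)
    return [float(row[column]) for row in reader]


# Extract the vertical position of the nose across all logged frames.
nose_y = load_series(io.StringIO(SAMPLE_CSV), "nose_y")
print(nose_y)
```

With a real log, `io.StringIO(SAMPLE_CSV)` would be replaced by an open file handle, and the resulting list could be plotted against the frame index to eyeball the repetitions.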

The data looked like this:

You don’t need to be a genius to look at this chart and figure out how many squats a person performed. I’m sure that many algorithms and machine learning models would be equally qualified. But this was a hackathon and I couldn’t afford that level of sophistication.

Instead, I implemented the most basic algorithm imaginable:

- Ignore all keypoints other than the nose.

- Record the lowest and the highest position of the nose.

- Count a repetition whenever the nose crosses the halfway point.

It worked with squats, push-ups, sit-ups, and pull-ups. I was satisfied.
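The three steps above can be sketched in Python roughly as follows. This is a minimal illustration rather than the app's actual code: the `RepCounter` class, the `min_range` noise guard, and the choice to count only crossings in one direction (so that a full down-and-up cycle counts once) are all assumptions made for the sketch. Positions are image coordinates, where `y` grows toward the bottom of the frame.

```python
class RepCounter:
    """Counts repetitions from a stream of vertical nose positions."""

    def __init__(self, min_range=20.0):
        self.lowest = None    # smallest y seen so far
        self.highest = None   # largest y seen so far
        self.past_midpoint = False
        self.count = 0
        # Assumed guard: ignore movement until the nose has travelled
        # at least this many pixels, so jitter is not counted.
        self.min_range = min_range

    def update(self, y):
        """Feed one nose position and return the running repetition count."""
        self.lowest = y if self.lowest is None else min(self.lowest, y)
        self.highest = y if self.highest is None else max(self.highest, y)
        if self.highest - self.lowest < self.min_range:
            return self.count

        midpoint = (self.lowest + self.highest) / 2
        past = y > midpoint
        # Count only the transition from one side of the midpoint to the
        # other in a single direction, once per cycle.
        if past and not self.past_midpoint:
            self.count += 1
        self.past_midpoint = past
        return self.count


def count_reps(nose_ys, min_range=20.0):
    """Run a whole recorded series through a fresh counter."""
    counter = RepCounter(min_range)
    for y in nose_ys:
        counter.update(y)
    return counter.count


# Two squats: the nose starts high in the frame (small y), drops, and returns.
squats = [100, 100, 150, 200, 150, 100, 150, 200, 150, 100]
print(count_reps(squats))  # → 2
```

Because the lowest and highest positions are never reset in this sketch, a single outlier frame would stretch the range for the rest of the session; a sturdier version would probably smooth the signal or let old extremes expire.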

Polishing the app

With the core functionality working and a few hours left before the demo, I decided to polish the app. I tweaked the colors, adjusted the layout, added audio feedback (“One!”, “Two!”, “Three!”), implemented some settings, designed an app icon, and published the APK. You can find the final product below:

Conclusion

Overall, the hackathon was a great experience. I was lucky enough to be selected as a finalist and I had the opportunity to demo my app to the judges. Next time, I’ll make sure to join a team.